Progress on flexible tactile sensors in robotic applications on objects properties recognition, manipulation and human-machine interactions

Abstract

Robots with integrated tactile sensors can accurately perceive contact force, pressure, vibration, temperature and other tactile stimuli. Flexible tactile sensing technologies have been widely utilized in intelligent robotics for stable grasping, dexterous manipulation, object recognition and human-machine interaction. This review presents promising flexible tactile sensing technologies and their potential applications in robotics. The significance of robotic sensing and the performance requirements of tactile sensing are first described. The six commonly used sensing mechanisms of tactile sensors are then briefly illustrated, followed by the progress in novel structural designs and performance characteristics of several promising tactile sensors, such as highly sensitive pressure and tri-axis force sensors, flexible distributed sensor arrays, and multi-modal tactile sensors. Then, the applications of tactile sensors in robotics, such as object properties recognition, grasping and manipulation, and human-machine interactions, are thoroughly discussed. Finally, the challenges and future prospects of robotic tactile sensing technologies are discussed. In summary, this review will be conducive to the novel design of flexible tactile sensors and can serve as a heuristic for developing the next generation of intelligent robotics with advanced tactile sensing functions.

Keywords

INTRODUCTION

Haptic perception is one of the essential sensations for human beings to collect ambient information[1]; it can distinguish multiple sensory stimuli through mechanoreceptors and thermoreceptors, and helps humans to improve grasping stability, recognize object properties and interact with the surrounding environment[2]. Haptic sensing has also been applied in robotics. Traditional robotic sensing using vision or audio devices can detect environmental information, but it is difficult to extract detailed features of target objects through contactless measurement[3]. Robots with tactile sensing capability can accurately detect external force, vibration, temperature, and other contact stimuli[4]. Therefore, tactile sensing technologies have been widely utilized in intelligent robotics for stable grasping[5], dexterous manipulation[6], object properties recognition[7], and human-machine interaction[8].

The tactile sensor is the key device that converts contact stimuli into processable signals based on different sensing mechanisms, such as piezoresistive, capacitive, piezoelectric, triboelectric, magnetic, and optical sensing[9]. To imitate the somatosensory system of human beings, robots may require numerous sensors integrated into their bodies, and it is desirable for tactile sensors to simultaneously measure multi-modal signals. Thus, several types of promising tactile sensors, such as tri-axis force sensors[10], large-scale flexible sensing arrays[11], and multi-modal sensors[12], have been widely used in robotics for tactile perception. To satisfy the requirements of intelligent robotics, researchers have been pursuing high-performance tactile sensors by studying highly sensitive materials[13] and novel structural designs[14,15]. Developing flexible tactile sensors with high sensitivity, wide detection range, fast dynamic response, and good repeatability remains the fundamental requirement for robotic tactile perception.

With tactile sensors and advanced interactive technology, robots can work in unpredictable and changeable spaces rather than only in structured environments such as production lines[16]. It is of great significance for robots with tactile perception to perform complicated tasks and interact with external objects, especially human beings. Before conducting specific tasks, robots are required to explore the object’s properties, such as shape, material, and texture[17]. When extracting surface properties, tactile sensors show significant advantages over traditional vision and audio devices, and they can also measure the global or even internal properties of an object. Besides, in robotic grasping and dexterous operation tasks, grasping stability needs to be carefully considered. Moreover, robots are increasingly expected to perform interactive tasks with human beings. Based on interaction level, human-machine interactions (HMIs) can be classified into measurement of human parameters[18], input interfaces for robotic control[19], and closed-loop duplex interaction with sufficient feedback[8].

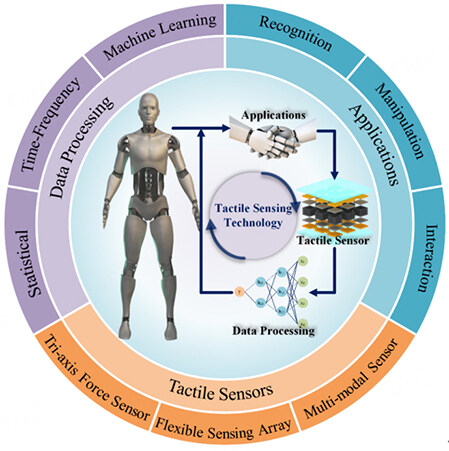

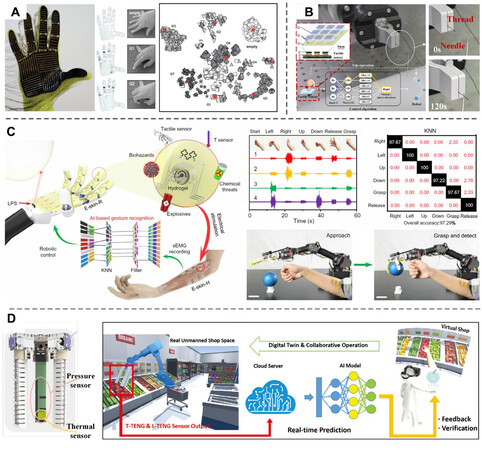

This review provides an overview of the latest progress of tactile sensing technologies and their applications in intelligent robotics, as shown in Figure 1. Section “REQUIREMENTS OF TACTILE SENSING IN ROBOTICS” summarizes the significance and requirements of tactile sensors in robotic applications. Section “PROGRESS OF FLEXIBLE TACTILE SENSORS FOR ROBOTICS” discusses the working principles and characteristics of several promising tactile sensors. Then, Section “APPLIED TACTILE SENSORS IN ROBOTIC APPLICATIONS” discusses tactile sensing technologies, especially data processing methods and their applications. Finally, Section “SUMMARY AND FUTURE PROSPECTS” summarizes the challenges and prospects of robotic tactile sensing technologies.

REQUIREMENTS OF TACTILE SENSING IN ROBOTICS

Robots generally work in structured environments where the ambient parameters are describable and predictable. With the development of automatic and intelligent devices, it is necessary for robots to interact with target objects and adapt to varying environments[16]. Tactile sensors can measure the contact stimuli and provide a detailed description of the ambient environment, which assists robots in working efficiently in many scenarios, such as domiciliary service[20], rehabilitation therapy[21], industrial production[22], and even underwater exploration[23]. Robots with tactile sensing abilities mainly conduct three types of tasks: information exploration, movement manipulation, and human-machine interactions (HMIs). To extract tactile features of objects, robots usually need to contact the target objects and perform exploration actions such as pressing, grasping, sliding, and pulling[24]. Robotic movement manipulation aims to complete specific movement trajectories and manipulation tasks based on the exploration results, usually through robotic grasping. Combining the former two tasks, HMIs require robots to gather commands or information from human beings and provide immediate feedback. All these tasks require robots to be integrated with high-performance tactile sensing devices.

As the critical device for robotic haptic sensing, tactile sensors are required to have certain functional characteristics according to specific robotic tasks and applications. Contact force is one of the most intuitive types of tactile information[25], and it is essential for a robot to measure external force and pressure to conduct manipulation or interactive tasks. Recently, tri-axis force sensors that detect normal and shear forces simultaneously have shown promise; they can be applied in interactive robotic missions such as sliding detection, frictional coefficient measurement, and smart input devices[26,27]. In addition to force sensing, it is desirable for robots to detect other tactile stimuli, including strain, temperature, humidity, and proximity[28]. Multimodal tactile sensors can provide robots with a more distinct picture of the ambient environment, and data fusion technology can extract additional features, which improves the accuracy of interactive tasks, especially object recognition[29]. Besides the sensing modality, the number and arrangement of tactile sensors should also be carefully considered. Tactile sensors with one sensing unit for single-point sensing may no longer satisfy the increasing demands of intelligent robotics, which call for distributed tactile sensor arrays. High-spatial-resolution arrays would be suitable for perceiving subtle stimuli, similar to the human somatosensory system[30], and a large-area tactile sensor array can cover almost the entire body of a robot to act as electronic skin[31].

To obtain accurate information from objects and the ambient environment, the sensing performance of tactile sensors needs to be carefully designed according to specific applications. Since different robotic operation tasks may have different performance requirements, it is not very meaningful to summarize a general performance target[9]. For instance, tactile sensors mainly exploring environment conditions and object properties are generally required to have a wide sensing range, high stability, and quick dynamic response, which would be more suitable for diverse applications[32]. For daily manipulation, the grasping force is generally less than 10 N[20] and no higher than 30 N, and the response time is preferably less than

Sensing performances and robotic applications of several novel tactile sensors and commercially available products

| Year | Sensor | Sensing principle | Sensitivity | Detection range | Response time/frequency | Cyclic stability (cycles) | Applications |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 2002 | TactArray (Pressure Profile Systems)[35] | Capacitive | -- | 0.01-552 MPa | -- | -- | Attendant care for the elderly (2009)[36] |
| 2004 | OFET tactile sensor[37] | Piezoresistive | -- | 30 kPa | -- | > 2,000 | Tactile map |
| 2008 | BioTac (SynTouch)[38] | Piezoresistive (F); Thermal-resistive (T); Hydroacoustic (V) | -- | 50 N (F); 75 °C (T); ±0.76 kPa (V) | 1,040 Hz (F); 22.6 Hz (T); 10-1,040 Hz (V) | -- | Texture discrimination (2012)[39]; Tool manipulation (2018)[40] |
| 2009 | Gelsight (Gelsight)[41] | Visual-tactile | ~6.67 N/mm (Fn); ~6.33 N/mm (Fs) | 0.05-5 N (Fn); 0.05-2.5 N (Fs) | -- | -- | Pose estimation (2014)[42]; Hardness sensing (2017)[43] |
| 2010 | Micro-pyramid sensor[44] | Capacitive | 0.55 kPa⁻¹ | 0.003-35 kPa | ~500 ms | 10,000 | Insect sensing |
| 2011 | Optoforce (OnRobot)[45] | Optical | ~20 N/mm (Fn); ~6.67 N/mm (Fs) | 40 N (Fn); ±10 N (Fs) | -- | -- | Object center of mass estimation (2017)[46] |
| 2011 | Epidermal electronics[47] | Piezoresistive (EP, S); Thermal-resistive (T) | GF = 1.3 (S); ~0.55 Ω/°C (T) | 10% (S); 120 °C (T) | -- | 1,000 | Human electrical signal measurement |
| 2012 | TakkTile (RightHand Robotics)[48] | Barometric | 1382.2 counts/N (4 mm) | 4.4 N (10 mm) | 16 ms | > 1,000 | Grasping force comparison (2018)[49] |
| 2013 | Triboelectric sensor[50] | Triboelectric | 0.31 kPa⁻¹ | 0.002-12.5 kPa | < 5 ms | 30,000 | Tactile map |
| 2014 | Multi-modal electronic skin[51] | Piezoresistive (F, S); Thermal-resistive (T); Capacitive (H) | 0.41% kPa⁻¹ (F, S1); ~3.3 mV/°C (T, S6); 0.08%/% (H) | 200 kPa (F, S1); 30% (S, S6); 25-55 °C (T); 10%-60% RH (H) | -- | -- | Prosthetic skin and nervous interface |
| 2018 | Hierarchically patterned e-skin[52] | Capacitive | 0.19 ± 0.07 kPa⁻¹ (Fn); 3.0 ± 0.5 Pa⁻¹ (Fs) | 0.5-100 kPa | ~224 ms | 30,000 | Robotic force control |
| 2019 | Tactile glove[34] | Piezoresistive | -- | 0.03-0.5 N | -- | 1,000 | Human grasping signatures recognition |
| 2019 | IZO-based multi-modal sensor[53] | Piezoresistive | GF = 2.11 ± 0.13 (S); α ≈ -5% (T) | 30% (S); 28.5-45 °C (T) | 34.7 μs (FET) | 2,000 (FET) | Human-machine interaction |
| 2020 | Quadruple tactile sensors[29] | Thermal-resistive | 117 mV/kPa (F); 0.0017 °C⁻¹ (To); 0.0016 °C⁻¹ (Te) | 80 kPa (F); 25-55 °C (T) | 400 ms | 500 | Garbage sorting |
| 2021 | Magnetic high-resolution sensor[30] | Magnetic | 0.01 kPa⁻¹ (Fn); 0.1 kPa⁻¹ (Fs); 0.27 kPa⁻¹ (Fs) | 120 kPa (Fn); 16 kPa (Fs) | 15 ms | 30,000 | Robotic hand grasping |
| 2022 | Physicochemical sensor[54] | Piezoresistive (F); Thermal-resistive (T) | ~0.54 A/kPa (F); ~-0.001 °C⁻¹ (T) | 0.1 kPa (F); 25-45 °C (T) | -- | 1,000 | Robotic hand control and feedback |

PROGRESS OF FLEXIBLE TACTILE SENSORS FOR ROBOTICS

Tactile sensing principles

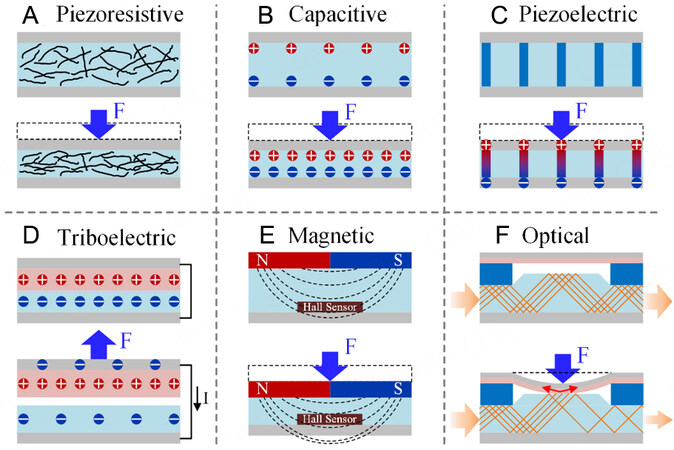

Tactile sensors transduce mechanical stimuli into electrical signals, which can be further analyzed by the robot's microprocessor for feature extraction. Contact force and pressure are generally considered the most intuitive tactile information, which needs to be accurately detected during robotic grasping and manipulation[25]. There are six main sensing principles for commonly designed tactile force sensors, as shown in Figure 2. Among numerous tactile force sensors, piezoresistive, capacitive, piezoelectric, and triboelectric sensors have been intensively studied and utilized[55-57]. The piezoresistive tactile sensor measures force changes based on the resistance variation caused by the deformation of sensing elements[58], tunneling effects[59], or the contact principle[60]. The capacitive tactile sensor can be simplified as a parallel-plate capacitor, which detects forces through changes in the plate separation[61] or overlapping area[62]. Both piezoresistive and capacitive sensors have simple structural designs and readout circuits, and the scalability of their sensitivity and detection range makes them suitable for multiple robotic scenarios, from dexterous manipulation to collision detection[14,63]. Piezoelectric and triboelectric tactile sensors have been receiving widespread attention due to their outstanding self-powering and dynamic force sensing abilities[64]. The piezoelectric sensor generates electric charges of opposite polarity when its piezoelectric sensing elements are subjected to external force[65], while the triboelectric sensor measures the voltage induced by surface contact of materials with different triboelectric polarities[50].
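The parallel-plate simplification of the capacitive sensor can be made concrete with a short calculation. The following is only a minimal sketch of the ideal relation C = ε0·εr·A/d, ignoring fringing fields and any change of permittivity under compression; the function names and the example dimensions are illustrative assumptions, not values from the cited works.

```python
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity in F/m


def parallel_plate_capacitance(area_m2, gap_m, relative_permittivity=1.0):
    """Ideal parallel-plate capacitance C = eps0 * eps_r * A / d (fringing ignored)."""
    if area_m2 <= 0 or gap_m <= 0:
        raise ValueError("area and gap must be positive")
    return EPSILON_0 * relative_permittivity * area_m2 / gap_m


def relative_capacitance_change(area_m2, gap_m, compression_m,
                                relative_permittivity=1.0):
    """Relative change (C - C0) / C0 when a normal force shrinks the gap.

    Assumes the electrode area and permittivity stay constant, so only the
    plate distance changes (hypothetical idealization).
    """
    if not 0 <= compression_m < gap_m:
        raise ValueError("compression must be in [0, gap)")
    c0 = parallel_plate_capacitance(area_m2, gap_m, relative_permittivity)
    c = parallel_plate_capacitance(area_m2, gap_m - compression_m,
                                   relative_permittivity)
    return (c - c0) / c0


if __name__ == "__main__":
    # Hypothetical 1 mm x 1 mm unit with a 100 um dielectric compressed by 10 um.
    change = relative_capacitance_change(1e-6, 100e-6, 10e-6,
                                         relative_permittivity=3.0)
    print(f"relative capacitance change: {change:.4f}")
```

Under these assumptions the relative change depends only on the ratio of compression to gap, which is one reason softer or microstructured dielectrics (larger compression per unit pressure) yield higher sensitivity.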

Figure 2. Schematic illustrations of tactile sensing principles: (A) Piezoresistive; (B) capacitive; (C) piezoelectric; (D) triboelectric; (E) magnetic; (F) optical.

Several other types of tactile sensors have been reported in the past decade. The magnetic tactile sensor can measure force and strain based on the Hall effect[66], the giant magneto-resistance (GMR) effect[67], or the electromagnetic induction principle[68]. This type of sensor is highly sensitive to mechanical stimuli, and it is well suited to measuring tri-axis force within a single unit[69]. The optical sensor detects force-induced changes of light intensity or wavelength based on intensity modulation[70] or fiber Bragg grating (FBG) technology[71]; it is immune to electromagnetic interference, and light signals offer linear responses and high spatial resolution. Besides, other sensing mechanisms, including ultrasonic[72], ionic[73], and barometric[48], have also been reported for the design of tactile sensors.

Pressure and tri-axis force sensor

Force and pressure sensing can be considered the most significant capability of tactile sensors in robotics. Many object properties, including rigidity, weight, and shape, can be extracted by analyzing the pressure signals, which also give intuitive tactile feedback for manipulation tasks and human-machine cooperative missions. Numerous advanced materials have been applied to tactile sensors to improve flexibility[13,74]. For the sensor substrate, materials such as non-elastic polymer films (PET, PI, PEN, etc.), chemically cross-linked elastomers (PDMS, Ecoflex, etc.), physically cross-linked elastomers (SBS, TPU, etc.), and some artificial or natural substrates (fabric, paper, cellulose, etc.) have been reported. For the conductive materials, options include metal-based composites (Au, Ag, Cu, etc.), carbon-based composites (graphene, CNTs, CBs, etc.), liquid metals (Galinstan, EGaIn, etc.), conductive polymers (PEDOT:PSS, PPy, etc.), ionic hydrogels (alginate-, PVA-, gelatin-based, etc.), and some other emerging materials (MXenes, etc.). In addition, researchers have also made significant progress on the structural design of tactile sensors.

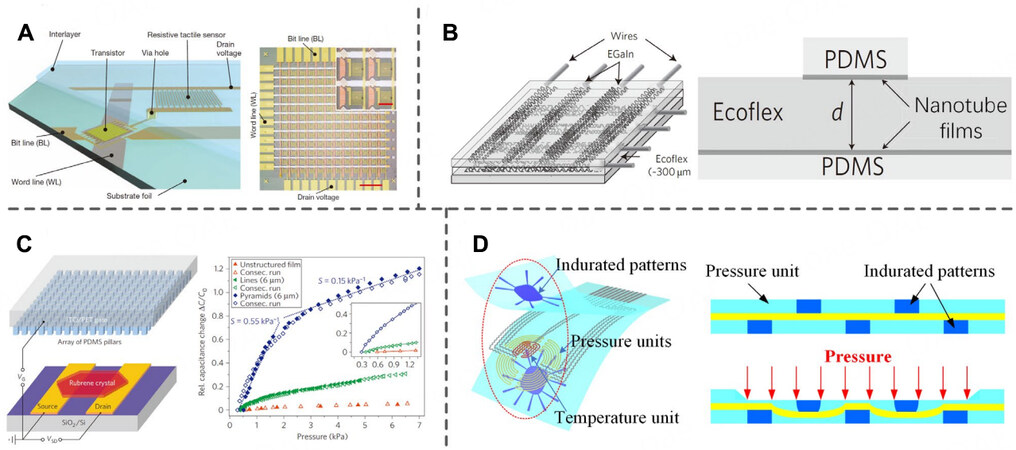

The structural design of a tactile sensor is critical to improving its sensing performance for pressure detection. Since transistors can efficiently amplify electric signals and reduce crosstalk[75], combining tactile sensing elements with large-scale transistor arrays is attractive for the design of tactile sensors. Figure 3A shows the structure of pressure-sensitive units connected with the electrodes of transistors[76], and some other sensors directly fabricate the transistor arrays with force-sensitive materials[77]. However, manufacturing of transistor-based structures is complicated, and sometimes the transistor elements are rigid. Another commonly used structure for pressure sensing is the laminated structure with the dielectric or sensitive layers sandwiched by two electrodes [Figure 3B][78]. This structure generally has a simple fabrication process, supports multiple sensing mechanisms, and offers high flexibility, which makes it more suitable for large-scale pressure sensor arrays with high compliance for curved surfaces[79]. However, the sensing performance of both the transistor-based and sandwiched structures is limited by the intrinsic properties of the sensitive materials. To improve the sensing performance, the sensitive layer or electrodes are decorated with microstructures

Figure 3. Structural design of pressure sensors: (A) resistive tactile sensor connected with the electrode of transistors (reproduced with permission[76]. Copyright 2013, Springer Nature); (B) capacitive laminated pressure sensors with Ecoflex dielectric and carbon nanotube electrodes (reproduced with permission[78]. Copyright 2011, Springer Nature); (C) PDMS with micro-pillars used in pressure sensitive OFET and its sensitivity response curves (reproduced with permission[44]. Copyright 2010, Springer Nature); (D) fully elastomeric pressure sensor with fingerprint-shaped sensitive material and interlocked sawtooth structure (reproduced with permission[88]. Copyright 2020, American Chemical Society).

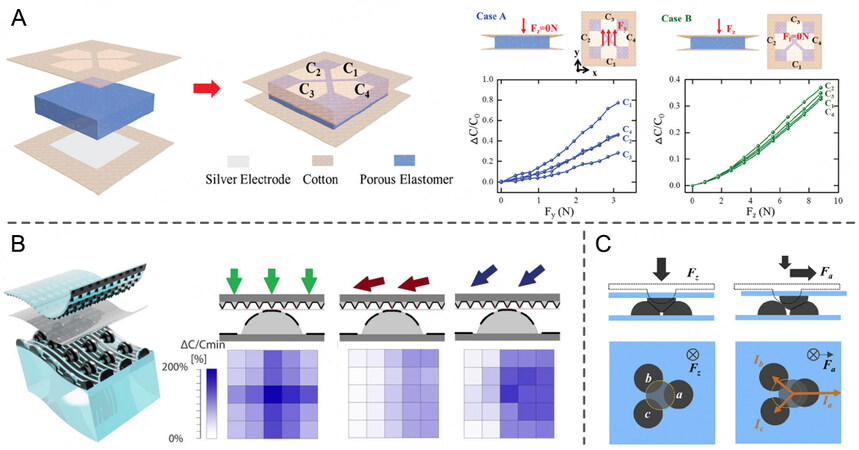

In practice, pressure and normal force sensing alone are far from sufficient to meet the requirements of most robotic tasks. Without shear force and force direction information, robots can hardly extract surface texture, especially friction coefficient and roughness[90], and it is relatively difficult to achieve stable grasping during complex and dexterous manipulation or movement. Thus, researchers have been working on the structural design of force direction or tri-axis force sensors. Among them, the combination of four elements distributed in an array is the most commonly utilized structure for tri-axis force sensing, and several transduction mechanisms have been reported using this structure[91-95]. By simultaneously analyzing the signal differences between the sensing elements, an arbitrary force can be decoupled into the components of Fx, Fy, and Fz

Figure 4. Structure of tactile sensor for tri-axis force and pressure direction sense: (A) porous dielectric elastomer-based sensor with four capacitive units for tri-axis force detecting, the arbitrary force can be decoupled into the components of Fx, Fy, and Fz (reproduced with permission[10]. Copyright 2021, Royal Society of Chemistry); (B) hierarchically patterned e-skin to detect the direction of pressure by analyzing tactile maps from multiple sensing elements (reproduced with permission[52]. Copyright 2018, The American Association for the Advancement of Science); (C) flexible piezoresistive tactile sensor based on interlocked hemisphere structure for tri-axis force sensing (reproduced with permission[97]. Copyright 2021, Elsevier).
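To illustrate how signal differences between four sensing elements can be turned into force components, the sketch below uses a simple linear differential model. It is an assumption-laden illustration rather than the decoupling method of any cited sensor: the element layout (one unit on each of the +x, -x, +y, and -y sides of a central bump), the names, and the calibration gains `kx`, `ky`, and `kz` are all hypothetical, and real devices typically need a calibrated, possibly nonlinear, mapping.

```python
from typing import NamedTuple


class Force(NamedTuple):
    fx: float
    fy: float
    fz: float


def decouple_triaxis(s_xpos, s_xneg, s_ypos, s_yneg, kx=1.0, ky=1.0, kz=1.0):
    """Estimate (Fx, Fy, Fz) from four element signals with a linear model.

    Each s_* is the relative signal change of one element (for example
    delta-C/C0 or delta-R/R0). Shear along an axis loads one element of the
    opposing pair more than the other, so the pair difference tracks shear;
    normal force loads all elements, so the sum tracks Fz.
    kx, ky, kz are hypothetical calibration gains (force per unit signal).
    """
    fx = kx * (s_xpos - s_xneg)
    fy = ky * (s_ypos - s_yneg)
    fz = kz * (s_xpos + s_xneg + s_ypos + s_yneg)
    return Force(fx, fy, fz)


if __name__ == "__main__":
    # Press down while pushing toward +x: the +x element responds most.
    print(decouple_triaxis(0.3, 0.1, 0.2, 0.2))
```

The differential form also cancels common-mode disturbances such as temperature drift on the shear channels, since a disturbance that affects both elements of a pair equally drops out of the difference.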

Distributed tactile sensor array

In most cases, single-point tactile sensors can only provide limited information about the ambient environment. For intelligent applications, robots need to be equipped with large-scale, multi-point electronic skins (E-skins) distributed all over the body[22]. For grasping manipulators, a tactile sensor array with high spatial resolution is required to capture tiny stimuli and conduct dexterous interaction tasks. A flexible distributed sensor array generally has good structural scalability, making it suitable for both large-area and high-spatial-resolution scenarios. Besides, multi-point detection generates abundant tactile data, which can improve signal acquisition efficiency and provide cross-validation between different sensing elements to improve the signal-to-noise ratio[33]. Combined with advanced machine learning (ML) algorithms, data collected through a tactile sensor array can also enhance the system’s ability to extract high-dimensional features[100]. Flexible distributed sensor arrays have been widely applied in robotic electronic skins, object shape detection, grasping gesture recognition, etc.[11].

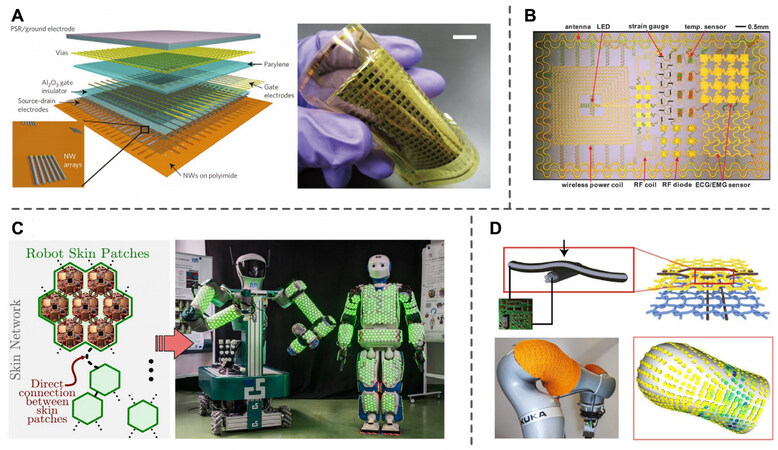

Owing to advances in soft sensing materials and fabrication technology, researchers have proposed several types of flexible sensor arrays that can be worn on the robotic body. The tactile matrix-based sensor, with well-organized electrodes and sensitive elements printed or deposited on a flexible substrate, is one of the most commonly used array structures, as shown in Figure 5A[101]. High-spatial-resolution tactile imaging can be achieved by micromanufacturing[102] or advanced algorithms[103]. Such an array can be wrapped around the finger or arm of a robotic manipulator, but its flexibility is limited when placed on a non-developable surface. Discarding the non-stretchable substrate, some researchers connected sensitive elements with filamentary serpentine nanoribbons [Figure 5B][47] or waveform nano-membranes[104], which allows the tactile sensor array to be mounted onto complicated surfaces. In addition, robotic skin networks that combine multiple sensitive cells have also been reported [Figure 5C][22]. Since the sensitive patches can be easily connected and replaced, this modular design approach is convenient for operation and maintenance. Researchers have also made significant progress in conformal adhesion using kirigami methods[105] or fractal theory[106]. Besides, tactile textiles with sensitive elements knitted into flexible fiber [Figure 5D][107] also show great advantages in large-scale tactile sensing on arbitrary 3D geometries. With ML algorithms, such arrays can recognize grasping gestures or manipulation movements[34].

Figure 5. Distributed tactile sensor array: (A) parallel nanowire-based pressure array on a flexible substrate (reproduced with permission[101]. Copyright 2010, Springer Nature); (B) flexible sensing elements connected with filamentary serpentine nanoribbons (reproduced with permission[47]. Copyright 2011, The American Association for the Advancement of Science); (C) module design of tactile patches, which can be connected and adhere on robotic body (reproduced with permission[22]. Copyright under a Creative Commons License); (D) tactile textiles with multiple sensing elements knitting into a flexible fiber that can be covered on robotic arm (reproduced with permission[107]. Copyright 2021, Springer Nature).
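As a small illustration of what a distributed array adds over a single-point sensor, the sketch below reduces one frame of a tactile map to a total reading and a pressure-weighted contact centroid. It is a minimal example under stated assumptions: the frame is a plain list of rows of already calibrated readings, and `contact_summary` and its `threshold` parameter are hypothetical names introduced here, not part of any cited system.

```python
def contact_summary(pressure_map, threshold=0.0):
    """Summarize one tactile frame given as a list of rows of readings.

    Returns None when no taxel exceeds `threshold`; otherwise a dict with the
    summed reading, the pressure-weighted centroid as (row, column) indices,
    and the number of active taxels.
    """
    total = 0.0
    weighted_row = 0.0
    weighted_col = 0.0
    active = 0
    for r, row in enumerate(pressure_map):
        for c, value in enumerate(row):
            if value > threshold:
                total += value
                weighted_row += r * value
                weighted_col += c * value
                active += 1
    if active == 0:
        return None
    return {
        "total": total,
        "centroid": (weighted_row / total, weighted_col / total),
        "active_taxels": active,
    }


if __name__ == "__main__":
    frame = [
        [0.0, 0.0, 0.0],
        [0.0, 2.0, 2.0],
        [0.0, 0.0, 0.0],
    ]
    print(contact_summary(frame))
```

Tracking how the centroid moves between consecutive frames is one simple way to notice an object shifting in the grasp, although practical systems usually combine it with richer features or learned models.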

Multi-modal tactile sensor

In addition to force or pressure sensing, it is necessary for robots to detect other tactile stimuli such as strain, vibration, temperature, humidity, and proximity[108], which can provide a more distinct picture of the characteristics of the surrounding objects and environment. Among these stimuli, thermal parameters can not only provide clinical information for a variety of diseases but also improve the object recognition rate through thermal conductivity estimation, which assists robots in establishing a perception capacity similar to that of human beings[108]. Compared with single-mode measurement, multi-modal tactile sensors can detect multiple types of tactile information simultaneously, which is far more efficient for tactile signal collection. Besides, multi-data fusion technologies[12] can help to extract additional features by high-dimensional analysis. This can increase the success rate of object recognition in robotic applications[7] and promote emotional communication between humans and robots. However, the structure of multi-modal tactile sensors is sometimes complicated, and it is still challenging to accurately extract multi-modal tactile stimuli without crosstalk. According to the structural design, multi-modal tactile sensors can be classified into three categories: centralized, distributed, and hybrid.

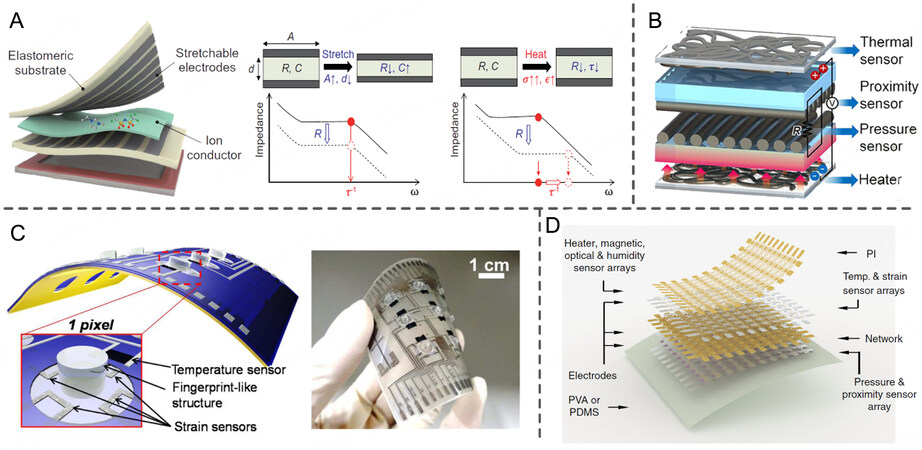

Centralized sensors: Centralized sensors can use one sensing unit to distinguish multi-modal information[109,110]. As shown in Figure 6A, You et al. presented an ionic receptor with a simple electrode-electrolyte-electrode structure[111]. Through the ion relaxation dynamics analysis, temperature and strain can be simultaneously detected without signal interference. Some similar works differentiate mechanical stimuli such as pressure, bending, shearing, or stretching by analyzing the characteristics of the signals[112,113]. Other centralized sensors collect mutually independent signals from different measuring elements stacked together[114,115]. Seok et al. developed a four-layer multifunctional sensor capable of simultaneous sensing of temperature, pressure, and proximity, and the outputs of each element are measured independently, as shown in Figure 6B[116]. The centralized sensors are generally used in single-point sensing for dexterous manipulation and grasping due to their compact structure. But advanced decoupling algorithms need to be considered to overcome the effects of signal crosstalk[117].
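One generic way to think about decoupling crosstalk between two stimuli is a linear sensitivity model: if two readouts each respond to both stimuli, and the sensitivity matrix is known from calibration and is well conditioned, the stimuli follow from inverting that matrix. The sketch below shows this idea only; it is not the ion relaxation analysis used by You et al., and the matrix values, names, and units are hypothetical.

```python
def decouple_two_stimuli(y1, y2, sensitivity):
    """Recover two stimuli (x1, x2) from two readouts under a linear model.

    Assumed model (hypothetical calibration):
        y1 = a * x1 + b * x2
        y2 = c * x1 + d * x2
    with sensitivity = ((a, b), (c, d)). Solved with Cramer's rule.
    """
    (a, b), (c, d) = sensitivity
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("sensitivity matrix is singular; stimuli cannot be separated")
    x1 = (d * y1 - b * y2) / det
    x2 = (a * y2 - c * y1) / det
    return x1, x2


if __name__ == "__main__":
    # Hypothetical calibration: readout 1 is mostly temperature-driven,
    # readout 2 mostly strain-driven, each with some cross-sensitivity.
    S = ((2.0, 0.5), (0.1, 1.5))
    true_temperature_change, true_strain = 3.0, 0.02
    y1 = S[0][0] * true_temperature_change + S[0][1] * true_strain
    y2 = S[1][0] * true_temperature_change + S[1][1] * true_strain
    print(decouple_two_stimuli(y1, y2, S))
```

The closer the determinant is to zero, the more the two readouts respond alike and the more measurement noise is amplified, which is one way to see why structurally independent sensing elements ease decoupling.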

Figure 6. Multi-modal tactile sensors: (A) centralized ionic receptor that can distinguish temperature and strain (reproduced with permission[111]. Copyright 2020, The American Association for the Advancement of Science); (B) four-layer stacked multifunctional sensor capable of sensing temperature, pressure, and proximity (reproduced with permission[116]. Copyright 2020, Springer Nature); (C) distributed temperature and strain sensor array (reproduced with permission[119]. Copyright 2014, American Chemical Society); (D) hybrid electronic skin matrix with stacked and distributed layout (reproduced with permission[122]. Copyright 2018, Springer Nature).

Distributed sensors: Distributed sensors utilize the planar sensing array to form a multi-modal measurement network. In general, the sensing units are designed with different structures to measure various tactile stimuli respectively[118]. Figure 6C illustrates the distributed multi-modal sensor array reported by Harada et al., in which the sensing elements of strain and temperature are distributed in different areas and work independently[119]. Besides, elements with identical structures are usually assembled to decouple normal and shear forces[60,120]. In contrast to the centralized one, the distributed multi-modal sensors are more suitable for electronic skins, which can cover the entire robotic body to create large-area interaction networks. The crosstalk is reduced due to the intrinsic isolated arrangement of sensing elements, while it is necessary to apply a high-frequency signal acquisition circuit to cope with abundant sensing units.

Hybrid sensors: Hybrid sensors combine the stacked structure and distributed arrays to measure multi-modal tactile signals[121]. Kim et al. developed an ultrathin strain, pressure, and temperature sensing array overlaid with another layer of humidity sensors, which achieves excellent performance in intelligent prostheses and peripheral nervous system interfaces[51]. Hua et al. presented a highly stretchable electronic skin matrix combining a stacked and distributed layout, which can measure seven types of external stimuli [Figure 6D][122]. Hybrid sensors are an extension of centralized and distributed multi-modal sensors, so both signal crosstalk and the acquisition of abundant data should be carefully considered. In the future, an essential topic for multi-modal sensors is to capture more tactile information with low interference in limited space.

APPLIED TACTILE SENSORS IN ROBOTIC APPLICATIONS

Recognition of object properties

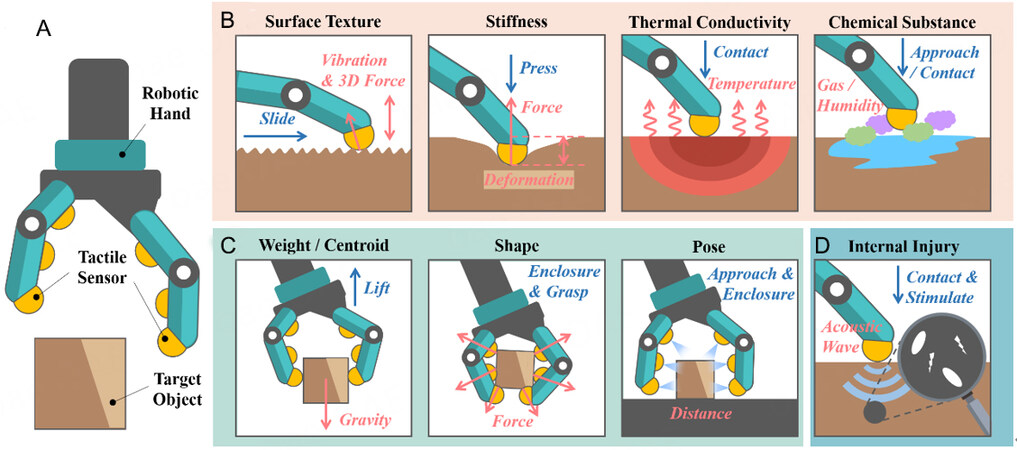

Exploring object properties is one of the most significant tasks for robots. It is necessary for robots to gather information on the objects they interact with, which can not only provide a clear view of the surrounding environment but also assist decision-making in high-level tasks such as manipulation control or human-machine interaction[17]. For the past two decades, image or acoustic features obtained by vision or audio devices have been widely used in robotic object recognition[123]. However, light and sound may be obstructed and fluctuate during manipulation[3], and it is difficult to extract tactile features without contacting the target object. Figure 7A shows a robotic hand detecting object properties with tactile sensors. As a complement to vision and audio information, tactile features can significantly enrich the description of object characteristics, which are commonly divided into three categories: external local properties, global properties, and internal properties. This section summarizes the principles and advanced algorithms used to extract object properties in recent years, and Table 2 summarizes the processing methods and practical applications of some related works.

Figure 7. The principle of robotic object properties recognition: (A) robotic hand with tactile sensors to grasp object; (B) detect object external local properties including surface texture, stiffness, thermal conductivity, and chemical substance; (C) detect object global properties such as weight, position of centroid, shape, and pose; (D) detect object internal properties.

Tactile sensors for object properties recognition

| Object properties | Processing methods | Practical applications | References |
| --- | --- | --- | --- |
| Surface texture | FFT, Bayesian exploration | Materials classification | [39] |
| | DSWT | Surface recognition; slide monitoring | [124] |
| | STAM | Textile classification | [125] |
| | LSTM | Textile classification; Braille reading | [66] |
| Stiffness | k-NN, DTW | Object recognition | [126] |
| | LSTM, RNN | Health monitoring | [43] |
| | Variation of the Hertz model | | [127] |
| Thermal conductivity | ANN | Materials classification | [128] |
| | AC method | Chronic wound management | [129] |
| | CTD feedback circuit | Garbage sorting | [29] |
| Chemical substance | LPF | Sweat analysis | [130] |
| | NN | Practical surgical compensation | [131] |
| | LPF | Human hand proximity | [132] |
| Inertial parameters | Cross-correlation analysis | Object recognition | [46] |
| | CoM line calculation | Grasping pose adjustment | [133] |
| | FNN | Grasping position choosing | [134] |
| Shape | GP | Object shape reconstruction | [135] |
| | SVM | Object recognition | [136] |
| | iCLAP | | [137] |
| | k-NN | | [91] |
| Pose | TIQF | Object pose estimation | [138] |
| | CNN | | [139] |
| | CNN | Manipulation positioning | [140] |
| Internal properties | Sparse GPs | Liquid viscosity estimation | [141] |
| | GPIOIS | Inner-outer shapes estimation | [142] |

External local properties recognition

External local properties directly describe the characteristics of the contact region between the tactile sensor and the target object, which can hardly be measured by vision and audio sensors. In particular, if the object is entirely homogeneous or smaller than a sensing unit, the local properties can be treated as equivalent to global properties. Figure 7B illustrates the commonly used exploring actions and response signals to detect external properties including surface texture, stiffness, thermal conductivity, and the attached chemical substance.

Surface texture mainly represents the superficial characteristics of an object, such as roughness, friction coefficient, and micromorphology[7], and it has been used in sliding monitoring, textile recognition, and even Braille reading[124,143,144]. In general, tactile sensors that measure dynamic force and vibration are slid along the object contour, and texture features are extracted by frequency spectrum analysis. Texture categories can then be classified with machine learning methods such as support vector machine (SVM), Bayesian exploration, or long short-term memory (LSTM)[66,145]. Besides, tri-axis force sensors can estimate the surface friction coefficient[90], and vision-based tactile sensors can extract surface micro-structure patterns[42]. Stiffness (or hardness, elasticity) is one of the most significant properties for estimating the mechanical impedance of an object. It helps robots adjust the grasping force and avoid damaging the target[146], and it can also assist interaction activities such as health monitoring[147]. For precise measurement, stiffness is commonly extracted by jointly analyzing the force and deformation signals during static pressing[127]. For object classification, algorithms such as k-nearest neighbor (k-NN) and LSTM are used[43,126].
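As a minimal sketch of the frequency-spectrum step described above, the following dependency-free Python snippet computes the magnitude spectrum of a vibration trace with a naive discrete Fourier transform and returns the dominant frequency as a simple texture feature. The function names, the sampling rate, and the synthetic signal are illustrative assumptions and are not taken from the cited works; a practical pipeline would use an FFT library and pass richer spectral features to a classifier.

```python
import cmath
import math


def magnitude_spectrum(samples, sample_rate_hz):
    """Return (frequencies, magnitudes) for the non-negative DFT bins.

    Naive O(N^2) DFT, used here only to keep the sketch dependency-free.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the static (DC) contact force
    freqs, mags = [], []
    for k in range(n // 2 + 1):
        acc = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(centered)
        )
        freqs.append(k * sample_rate_hz / n)
        mags.append(abs(acc) / n)
    return freqs, mags


def dominant_frequency(samples, sample_rate_hz):
    """Frequency of the strongest spectral peak, a simple texture feature."""
    freqs, mags = magnitude_spectrum(samples, sample_rate_hz)
    peak = max(range(len(mags)), key=mags.__getitem__)
    return freqs[peak]


# Hypothetical example: sliding over a periodic texture produces a 40 Hz
# vibration superimposed on a constant contact force, sampled at 400 Hz.
fs = 400.0
signal = [1.0 + 0.2 * math.sin(2 * math.pi * 40.0 * i / fs) for i in range(200)]
texture_feature = dominant_frequency(signal, fs)
```

In this sketch the dominant frequency would serve as one entry of a feature vector; which features are most discriminative depends on the sensor and the sliding speed.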

In addition to mechanical tactile sensing, thermal and chemical perception is also significant for robotic manipulation, and promising applications such as object recognition[148] and health monitoring[129] have been reported. Temperature is another significant parameter in tactile sensing, and measuring it brings robotic tactile perception closer to that of human beings. Principles such as resistivity change, the Seebeck effect, pyroelectricity, and thermochromism have been widely applied in temperature sensing[4,108]. Furthermore, detecting the thermal conductivity of an object relies on monitoring the time-varying heat flow, which requires combining a temperature sensor with a heat source[29]. Other external local properties such as humidity, gas concentration, or liquid composition describe superficial or ambient characteristics of the objects[149]. In most cases, these properties can be measured directly or extracted after noise elimination with, for example, a low-pass filter (LPF)[130]. Applications such as health monitoring[131], baby care[51], and human proximity detection[132] have been reported.
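The noise-elimination step mentioned above can be illustrated with a first-order low-pass filter. This is only a sketch under stated assumptions: the cutoff frequency, sampling rate, and synthetic readings are hypothetical, and the cited works may use different filter designs.

```python
import math


def low_pass_filter(samples, cutoff_hz, sample_rate_hz):
    """First-order IIR low-pass filter (a discretized RC filter)."""
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)  # smoothing factor in (0, 1)
    filtered = []
    y = samples[0]  # start from the first reading to limit the start-up transient
    for x in samples:
        y = y + alpha * (x - y)
        filtered.append(y)
    return filtered


# Hypothetical readings: a steady sensor level of 5.0 with alternating +/-0.5 noise.
raw = [5.0 + (0.5 if i % 2 == 0 else -0.5) for i in range(200)]
smooth = low_pass_filter(raw, cutoff_hz=2.0, sample_rate_hz=100.0)
```

A lower cutoff suppresses more noise but also delays the response to genuine changes in the measured quantity, so the cutoff would need to be tuned to the sensor dynamics.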

Global properties recognition

Global properties describe the holistic parameters of an object regardless of partial or superficial characteristics. In most cases, vision devices show clear advantages in sensing global properties, but tactile methods can serve as an effective complement in dimly lit or occluded environments. Besides, fusing vision and tactile methods can significantly increase the accuracy of object recognition[12]. Figure 7C illustrates the tactile exploratory actions and signals used to detect global properties such as inertial parameters, shape, and pose.

Inertial parameters play an important role in robotic manipulation: an accurate weight measurement serves as an auxiliary estimate for force control, and grasping at the center of mass (CoM) prevents a large torque from being applied to the manipulator[133]. Most researchers lift the target object using a manipulator equipped with 6D force and torque sensors, and methods such as cross-correlation analysis[46] and feedforward neural network (FNN)[134] are used to estimate inertial parameters. Besides, CoM measurement is more accurate when the tactile signal is combined with an a priori estimate from visual patterns[133].
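To make the role of the 6D force and torque reading concrete, the sketch below applies plain rigid-body statics rather than the cross-correlation or FNN methods of the cited works. For an object held at rest, the measured torque satisfies τ = r × F, so a single reading constrains the CoM to a line parallel to the gravity force. The function names and the example values are hypothetical, and sensor bias and gripper weight are assumed to have been compensated already.

```python
G = 9.81  # gravitational acceleration in m/s^2


def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def mass_and_com_line(force, torque):
    """Estimate mass and the line on which the CoM lies from one static reading.

    force, torque: gravity wrench of the held object in the sensor frame (N, N*m).
    Returns (mass, point, direction): the CoM is point + s * direction for some s.
    """
    f_sq = sum(c * c for c in force)
    if f_sq == 0.0:
        raise ValueError("zero force: the object weight is not observable")
    mass = f_sq ** 0.5 / G
    # torque = r x force, hence r = (force x torque) / |force|^2 + s * force.
    f_cross_t = cross(force, torque)
    point = tuple(c / f_sq for c in f_cross_t)
    direction = tuple(c / f_sq ** 0.5 for c in force)
    return mass, point, direction


# Hypothetical reading: a 0.5 kg object whose CoM is offset by (0.10, 0.05) m
# in the sensor x-y plane, with gravity along -z.
force = (0.0, 0.0, -0.5 * G)
torque = (-0.05 * 0.5 * G, 0.10 * 0.5 * G, 0.0)
mass, point, direction = mass_and_com_line(force, torque)
```

A second reading taken with the object in a different orientation would give a second line, and the CoM can then be located near the intersection of the two.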

Shape and pose provide the most intuitive impression of the interactive target, and they are traditionally detected by visual devices. A more complete description of the object can significantly improve the stability and controllability of robotic manipulation. For haptic shape perception, large-scale force arrays are often mounted on grasping manipulators to generate tactile images, which can be further processed with algorithms such as k-NN[91] and SVM[136]. By combining these with vision images or kinaesthetic data, particularly joint angles, a spatial point cloud of the object can be fitted using methods such as iterative closest labeled point (iCLAP)[137] and Gaussian process (GP)[135]. For pose sensing, a more accurate estimate of the object pose contributes to stable robotic manipulation. Since touch is intrusive and can rotate or translate the object, proximity sensors are sometimes used to capture pose parameters[3]. Pose estimation becomes more accurate when vision and tactile data are combined using algorithms such as translation invariant quaternion filter (TIQF)[138] or convolutional neural network (CNN)[139]. Besides, vision-based tactile devices, especially GelSight, combined with advanced image processing methods have advantages in sensing the shape and pose of small objects[42].
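The tactile-image classification mentioned above can be sketched with a small k-NN classifier. The 3×3 "tactile images", the labels, and the function name are illustrative assumptions; real sensing arrays are far larger, and the cited works use their own features and datasets.

```python
from collections import Counter


def knn_classify(query, training_set, k=3):
    """Classify a flattened tactile image by majority vote of its k nearest neighbors.

    training_set: list of (pressure_values, label) pairs with equal-length vectors.
    """
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    neighbors = sorted(
        training_set, key=lambda item: squared_distance(query, item[0])
    )[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical 3x3 tactile images (row-major, normalized pressure).
training = [
    ([0, 0, 0, 1, 1, 1, 0, 0, 0], "edge"),
    ([0, 0, 0, 0.9, 1, 0.8, 0, 0, 0], "edge"),
    ([0, 0.1, 0, 1, 0.9, 1, 0, 0, 0], "edge"),
    ([0, 0, 0, 0, 1, 0, 0, 0, 0], "point"),
    ([0, 0, 0, 0, 0.9, 0, 0, 0.1, 0], "point"),
    ([0.1, 0, 0, 0, 1, 0, 0, 0, 0], "point"),
]
label = knn_classify([0, 0, 0, 0.8, 1, 0.9, 0, 0, 0], training, k=3)
```

Raw Euclidean distance on pressure values is sensitive to the contact location, so in practice the images would likely be aligned or converted to translation-tolerant features first.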

Internal properties recognition

Internal properties describe the inner parameters of an object, which provide a more complete view of an anisotropic target [Figure 7D]. Traditionally, internal damage such as micro holes or cracks is detected with acoustic and infrared devices, whose principles are based on excitation and echo signals[72]. Most haptic devices aim to extract external properties, and robotic tactile sensors for measuring internal properties are less frequently reported. In reported work, researchers measured liquid content by shaking or rolling the container, and the vibration or multi-axis force signals were further processed through GP[141] or a high-pass filter (HPF)[150]. Others distinguished the inner and outer shapes of objects covered with soft materials using visual and tactile data[142].

Robotic grasping and manipulation

With the development of robotic technology and industrial demands, robots are increasingly expected to work in unstructured environments, which calls for strong environmental adaptability and the capacity to process complicated tasks. Robots equipped with multi-finger manipulators or graspers are promising for high-level, complicated manipulation tasks such as stable grasping, dexterous operation, and even tool manipulation. This section discusses methods for enhancing grasping stability and some dexterous operating applications in robotics.

Stability control of robotic grasping

Grasping is one of the most fundamental and widely used movements in robotics, especially for multi-finger manipulators or graspers[151]. It enables robots to transfer and recognize objects, and it is also a prerequisite for some non-grasp or tool manipulation tasks. The complete grasping process can be divided into pre-grasping, grasp execution, and grasp verification[17]. To perform dexterous and sophisticated manipulation tasks, it is essential to improve grasping stability, and commonly used methods for stable grasping include pose adaptation or adjustment, force control, and slippage detection.

Grasp adaptation and adjustment is an important stability control method at the beginning of a grasping task. Its primary purpose is to search for a proper grasping position or to relocate the grasped target[6]. In the pre-grasping stage, researchers can use vision or proximity sensors to roughly estimate the object shape and pose[152,153], which helps choose a grasping gesture that matches the target object and an initial grasping position that reduces the torsional load on the manipulator. A tentative grasp is then conducted, and the object pose and inertial parameters are revised using tactile information, so that the grasping gesture can be further adjusted and the torsional load minimized[140]. If the initial grasp is far from the expected state, re-grasping is necessary. Otherwise, the manipulator can quickly perform an in-hand operation based on the current grasping state, which relies on the manipulator’s dexterity and operating skills.

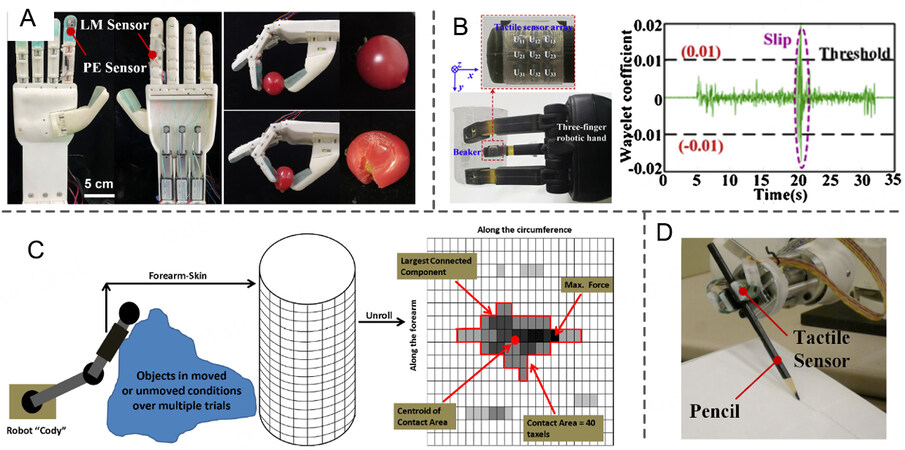

Once a proper grasping gesture and position have been chosen, priority should be given to force feedback and control for stable grasping[52]. First, an appropriate measurement of the object weight helps estimate the minimum grasping force, which ensures that the target object can be lifted. Moreover, it is necessary to determine the maximum grasping force by estimating the object stiffness[146], which prevents damage to or an overly tight grip on objects made of delicate or soft materials. Qiu et al. reported a robotic hand integrated with bioinspired multisensory electronic skins [Figure 8A][146]. The comparison indicates that the manipulator with force feedback can grasp a tomato stably, while the one without force monitoring damages it. Others have applied force feedback to a prosthetic hand[20], which assists disabled people in interacting with fragile or soft objects.
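The lower and upper force bounds described above can be sketched as follows. This is a simplified illustration and not the controller of the cited works: it assumes Coulomb friction at a given number of identical contacts, a linear-elastic object, and a hypothetical safety factor, and all numerical values are invented for the example.

```python
G = 9.81  # gravitational acceleration in m/s^2


def grasp_force_window(mass_kg, friction_coeff, stiffness_n_per_m,
                       max_deformation_m, contacts=2, safety_factor=1.5):
    """Return (f_min, f_max) per-contact normal force in newtons, or None if infeasible.

    f_min: friction at all contacts must carry the weight (Coulomb friction).
    f_max: force at which a linear-elastic object reaches its allowed deformation.
    """
    f_min = safety_factor * mass_kg * G / (contacts * friction_coeff)
    f_max = stiffness_n_per_m * max_deformation_m
    if f_min > f_max:
        return None  # no force is both sufficient to lift and gentle enough
    return f_min, f_max


# Hypothetical soft fruit: 0.15 kg, friction coefficient 0.5,
# stiffness 2000 N/m, at most 3 mm of allowed deformation.
window = grasp_force_window(0.15, 0.5, 2000.0, 0.003)
```

When the function returns `None`, the sketch indicates that a different grasp (more contacts, a higher-friction surface, or support from below) would be needed instead of simply squeezing harder.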

Figure 8. Robotic grasping stability control and dexterous manipulation: (A) biomimetic manipulator with piezoelectric sensors and the tomato grasping tasks with and without force control (reproduced with permission[146]. Copyright 2022, Springer Nature); (B) robotic grasping hand with tactile sensing arrays for slip detection (reproduced with permission[27]. Copyright 2019, Elsevier); (C) infer object hardness and velocity by pushing it with tactile sensing array (reproduced with permission[158]. Copyright under a Creative Commons License); (D) robotic gripper manipulating a pencil using tactile feedback (reproduced with permission[163]. Copyright 2014, Elsevier).

In addition, detecting object slippage is of great significance for confirming a stable grasp, especially when performing dynamic manipulation tasks in varied environments or with changeable target objects. Over the past decades, several techniques for slippage monitoring have been proposed[5]. Some utilize the friction cone model to measure the surface friction coefficient[30], which can be calculated from the normal and shear forces. Others monitor the vibration signals and extract frequency spectral features with processing methods including discrete wavelet transform (DWT) [Figure 8B][27] or fast Fourier transform (FFT)[154], after which methods such as principal component analysis (PCA), SVM, or Kalman filter (KF) can be utilized to estimate the slip state[155]. Recently, researchers have also applied advanced learning algorithms such as LSTM and NN to predict slippage events accurately[156,157].
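A vibration-based slip indicator of the kind described above can be sketched with a single-level Haar wavelet transform, the simplest DWT. The threshold and the synthetic force traces are hypothetical, and the cited works may use other wavelets, more decomposition levels, and calibrated thresholds.

```python
import math


def haar_detail_energy(samples):
    """Energy of the level-1 Haar wavelet detail coefficients of a signal window."""
    pairs = zip(samples[0::2], samples[1::2])  # a trailing odd sample is ignored
    details = [(a - b) / math.sqrt(2.0) for a, b in pairs]
    return sum(d * d for d in details)


def slip_detected(samples, energy_threshold):
    """Flag slip when the high-frequency energy exceeds a calibrated threshold."""
    return haar_detail_energy(samples) > energy_threshold


# Hypothetical force windows: a stable grasp and one with slip-induced vibration.
stable = [2.0] * 64
slipping = [2.0 + 0.3 * (-1) ** i for i in range(64)]
threshold = 0.5  # assumed value; would be calibrated per sensor in practice
stable_flag = slip_detected(stable, threshold)
slipping_flag = slip_detected(slipping, threshold)
```

The detail coefficients respond to rapid sample-to-sample changes, which is why micro-vibrations at slip onset raise their energy while a steady holding force does not.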

Dexterous operation and tool manipulation

The capacity to conduct dexterous or sophisticated manipulation tasks can significantly improve the intelligence and interaction ability of robots, making them more similar to human beings. Although robotic hand grasping can satisfy most manipulation tasks, it is still not enough to carry out complicated, human-like daily tasks. Besides grasping, robots are sometimes required to perform other manipulating movements such as pushing, rolling, pivoting, and pulling[17]. Bhattacharjee et al. inferred object hardness and velocity by pushing it with a tactile force array on the robotic arm [Figure 8C][158].

In addition to operating tasks that use direct contact between the manipulator (tactile sensors) and the target object, robots also need to grab external tools for specific manipulation tasks. Just as human beings extend their operation capacity with various tools, robots benefit greatly from tool manipulation in application scenarios such as industrial production, medical surgery, and domestic service. Compared with ordinary grasping or non-grasp operational tasks, tool manipulation is more difficult since the tactile devices must measure the contact events or forces between the tools and objects[17]. Hoffmann et al. controlled a robotic gripper to manipulate a pencil using tactile feedback [Figure 8D][163]. Other robotic tool manipulation works have also been reported, such as screw twisting[40] and knife cutting[164]. For the development of humanoid robots, the operated tools should preferably be separate from the manipulator and tactile devices, and researchers are encouraged to extract features of remote contact events using advanced algorithms.

Human-machine interactions

Due to the inevitable uncertainty of the working space caused by varying environmental parameters and other subjects (human beings or other robots), robots are expected to perform sophisticated manipulations and cope with changing information, especially in interactive missions with human beings. Human-machine interaction (HMI) is an information exchange process between human beings and robots or sensing devices, which significantly contributes to the development of the Tri-Co Robot (i.e., the Coexisting-Cooperative-Cognitive Robot)[165]. Based on the interaction level, HMI can be classified into human being parameters monitoring, input interface for robotic controlling, and closed-loop duplex interaction with sufficient feedback. This section discusses some recently reported works with different HMI applications.

Human being parameters monitoring

It is a high priority to ensure that robots understand the characteristic parameters of the interactive target, which is fundamental to conducting interactive activities. Since human beings are central to most HMI tasks, numerous works monitoring human body parameters have been reported[4,18]. Health monitoring has long been a research emphasis in clinical medicine, and skin electronics detecting arterial pulse waves[166], body temperature[167], and wound damage[129] can provide a practical reference for patient-care and surgery robots. Wearable skin patches monitoring the composition of sweat[130] or exhaled air[168] also contribute to disease diagnosis and prevention. Recently, biocompatible and implantable sensing devices attached to internal organ surfaces have aroused wide interest[169]. They can capture physiological signals such as the electrocardiogram (ECG)[170] or electroencephalogram (EEG)[51], which help robots recognize the interactive target accurately.

In addition to the health state, human movement or activity information also serves as a critical reference for HMI tasks. Stretchable devices attached to the fingers, wrists, neck, limb joints, or heel tendons can readily track the movement of key joints in the human body for posture identification[99,166,171-173]. Moreover, strain- or vibration-sensitive skin patches covering the face, throat, or muscles all over the body also help extract human emotion, voice information, or electromyography (EMG) signals[20,174,175]. Besides, some wearable interactive interfaces with advanced data processing algorithms show great advantages in human motion or pose recognition[107]. Sundaram et al. developed a knitted tactile glove with numerous sensing units, and human hand gestures can be accurately identified using the machine learning methods of CNN and t-SNE, as seen in Figure 9A[34].

Figure 9. Robotics for human-machine interactions (HMIs): (A) tactile glove resistive sensing array with high spatial resolution to recognize human grasping gestures (reproduced with permission[34]. Copyright 2019, Springer Nature); (B) human beings manipulate the high-resolution tactile sensor switch to control the robotic grasper for needle threading (reproduced with permission[30]. Copyright 2021, The American Association for the Advancement of Science); (C) closed-loop control of the remote robotic hand to grasp a softball: human beings utilize the EMG signal to actuate the robotic hand, and the grasping force signal is fed back through an electrical stimulator (reproduced with permission[54]. Copyright 2022, The American Association for the Advancement of Science); (D) digital-twin-based virtual shop based on VR devices and AI models (reproduced with permission[185]. Copyright 2021, John Wiley and Sons).

Input interface for robotic controlling

Once robots can receive and understand external information, human operators input interactive goals and control instructions so that the robots complete specific manipulation tasks. Numerous flexible human-machine interfaces have been reported, including traditional input devices, namely screens, mice, or keyboards[176], and wearable tactile systems, especially EMG skin patches[177] or intelligent data gloves[178]. Advanced algorithms such as CNN, linear discriminant analysis (LDA), and root mean square (RMS), together with confusion matrices, can assist in precisely extracting human instructions. These devices have been applied to the remote control of mobile robots[179], wheelchairs[180], robotic grasping manipulators[19], and virtual game characters[181]. Yan et al. reported a high-resolution magnetic tactile sensor array for the teleoperation of a robotic manipulator [Figure 9B][30]. NN algorithms can interpret human finger touch signals as grasper movement commands, which can even be used for the sophisticated task of needle threading. However, in most cases, the robot just functions as a receiving terminal for data processing and command execution without tactile feedback. Human operators can only observe the operation process and give instructions without on-site perception and cognition, which makes it challenging to make corresponding adjustments according to the actual operation state of the robot.
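As a minimal sketch of how an RMS feature can turn a raw EMG trace into a control instruction, the snippet below computes the RMS over non-overlapping windows and thresholds it. The command names, window length, threshold, and sample values are hypothetical; practical interfaces typically combine several features with a trained classifier such as LDA or a CNN.

```python
import math


def windowed_rms(samples, window):
    """Root mean square of consecutive non-overlapping windows."""
    return [
        math.sqrt(sum(x * x for x in samples[i:i + window]) / window)
        for i in range(0, len(samples) - window + 1, window)
    ]


def rms_to_commands(rms_values, close_threshold):
    """Map each RMS value to a hypothetical gripper command."""
    return ["close" if r > close_threshold else "open" for r in rms_values]


# Hypothetical EMG trace: rest (low amplitude) followed by a muscle contraction.
emg = [0.05, -0.04, 0.06, -0.05, 0.8, -0.9, 0.85, -0.75]
commands = rms_to_commands(windowed_rms(emg, window=4), close_threshold=0.3)
```

The RMS tracks the signal amplitude regardless of sign, which is why it is a common first feature for detecting muscle activation.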

Closed-loop duplex interaction

Ideal HMIs have the capacity for multi-object decision-making and duplex information exchange between human operators and robots, which can realize high-level interactive tasks with sophisticated manipulations. The robots receive and process human instructions while making decisions autonomously, and the on-site situation can be conveyed to human beings through different tactile feedback mechanisms such as mechanical, thermal, or electrical stimulation[182]. Humans can thus get a vivid perception of the operation site, which serves as a practical reference for revising the input instructions. Cooperative missions between humans and robots have long been reported[183], such as elderly attendant care[36] and intuitive teaching[22]. Tactile feedback also helps delicate control of the grasping manipulator. Yu et al. proposed a closed-loop control task in which a remote robotic hand grasps a softball [Figure 9C][54]. Human operators utilize the EMG signal to actuate the robotic hand, and the multi-modal sensing information between the grasping hand and the object is conveyed through electrical stimulation on human arms. Similar works applied underwater[23] or in other extreme environments have also been reported. Recently, virtual reality (VR) and augmented reality (AR) technologies have been reported to enable high-level HMI applications[8,184]. Sun et al. reported a digital-twin-based virtual shop by leveraging the IoT and AI analytics[185]. Human beings equipped with VR devices can receive real-time feedback about the grasped product, which shows great potential for advanced HMI in virtual space, as shown in Figure 9D.

SUMMARY AND FUTURE PROSPECTS

In summary, tactile sensors and sensing technology can significantly improve the perception capacity of robots and have been applied in various robotic applications such as dexterous manipulation and human-machine interactions. We summarized tactile sensing principles, promising tactile sensors for robotics, and mainstream robotic application scenarios. Diverse structural designs of pressure sensors, tri-axis sensors, large-scale sensing arrays, and multi-modal sensors were summarized in detail, providing a helpful reference for the structural design of tactile sensing devices in robotics. In addition, different robotic applications, including object properties recognition, grasping and manipulation, and human-machine interactions, were thoroughly discussed, which may be heuristic for the development of the next generation of intelligent robotics.

Although tactile sensing technology for robotic applications has achieved remarkable progress, some challenges remain to be addressed. For object properties recognition, extracting feature information is sometimes time-consuming, and researchers continue to pursue advanced algorithms and multi-modal sensors for fast and accurate recognition. Besides, grasping and manipulation tasks call for advanced motion control algorithms to achieve highly efficient operation, and both force feedback and slippage detection should be conducted in real time. Finally, for ideal human-machine interactions, the data processing should be conducted on site, and multiple kinds of feedback information are desired. However, recent works show limited data processing speed and less realistic feedback. Therefore, we summarize some improvements that can be achieved in the future:

(1) Large-scale tactile sensor arrays with high spatial resolution for robotics still need to be investigated. To achieve a haptic perception capacity similar to that of human beings, the tactile sensing array is required not only to cover the whole robotic body but also to have high spatial resolution for measuring subtle stimuli. Although high-spatial-resolution sensor arrays realized by ML algorithms[30] and full-body electronic skin[22] have been reported, a large-scale sensing array with high or scalable spatial resolution needs to be further investigated. Besides, the numerous signals generated by a large number of sensing units also pose a significant challenge to the ancillary data-collecting devices, which calls for great efforts to solve problems such as wireless transmission and crosstalk elimination.

(2) Multi-modal tactile sensors play an essential role in robotic tactile perception. Multi-modal tactile devices have long been reported, but a trade-off exists between structural complexity and the number of modalities. It is of great significance to develop simply structured tactile sensors sensitive to multiple physical or chemical parameters, and multi-modal tri-axis force sensors have rarely been reported[119]. In addition, since crosstalk exists between different modalities, decoupling technologies based on material and structure selection or signal correction can greatly contribute to precise measurement.

(3) Advanced data processing algorithms, especially machine learning, can help analyze numerous tactile signals. Data-driven signal processing methods such as NN, SVM, or PCA can extract high-dimensional features from large-scale datasets through intensive model training, which makes robots intelligent enough for advanced applications, but most data processing is executed offline. To achieve immediate interaction, it is necessary to perform online or even on-site data processing to improve the analysis speed. Besides, advanced algorithms for multi-data fusion show great advantages in extracting additional features, and they can achieve more accurate sensing when blending tactile, vision, or voice data if the problem of data source mismatch can be addressed.

(4) High-level human-machine interactions are always the target application for robotics. Advanced data processing methods, especially machine learning, can help extract high-dimensional and meaningful data from simple interactive movements, which enhances the intelligence of robots to make autonomous decisions. Although various feedback principles such as mechanical, thermal, or electrical stimulation have been reported, it is not easy to precisely reproduce the on-site interactive parameters. Feedback combining tactile, vision, voice, and other information shows considerable research value. In addition, remote control of robots in extreme environments, namely underwater, aerospace, or disaster sites, is a promising HMI application for the future.

DECLARATIONS

Authors’ contributions

Wrote the manuscript: Jin J, Wang S, Zhang Z

Revised the manuscript: Jin J, Wang S, Mei D, Wang Y

Availability of data and materials

Not applicable.

Financial support and sponsorship

This work is supported in part by the National Natural Science Foundation of China (52175522), Key Research and Development Program of Zhejiang Province (2022C01041), Fundamental Research Funds for the Central Universities (2022FZZX01-06) and Science Foundation of Donghai Laboratory (DH-2022KF01002).

Conflicts of interest

All authors declared that there are no conflicts of interest.

Ethical approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Copyright

© The Author(s) 2023

REFERENCES

1. Dargahi J, Najarian S. Human tactile perception as a standard for artificial tactile sensing - a review. Int J Med Robot Comput Assist Surg 2004;1:23-5.

2. Lee Y, Park J, Choe A, Cho S, Kim J, Ko H. Mimicking human and biological skins for multifunctional skin electronics. Adv Funct Mater 2020;30:1904523.

3. Navarro SE, Muhlbacher-karrer S, Alagi H, et al. Proximity perception in human-centered robotics: a survey on sensing systems and applications. IEEE Trans Robot 2022;38:1599-620.

4. Gao Y, Yu L, Yeo JC, Lim CT. Flexible hybrid sensors for health monitoring: materials and mechanisms to render wearability. Adv Mater 2020;32:e1902133.

5. Romeo RA, Zollo L. Methods and sensors for slip detection in robotics: a survey. IEEE Access 2020;8:73027-50.

6. Yamaguchi A, Atkeson CG. Recent progress in tactile sensing and sensors for robotic manipulation: can we turn tactile sensing into vision? Adv Robot 2019;33:661-73.

7. Luo S, Bimbo J, Dahiya R, Liu H. Robotic tactile perception of object properties: a review. Mechatronics 2017;48:54-67.

8. Sun Z, Zhu M, Shan X, Lee C. Augmented tactile-perception and haptic-feedback rings as human-machine interfaces aiming for immersive interactions. Nat Commun 2022;13:5224.

9. Pyo S, Lee J, Bae K, Sim S, Kim J. Recent progress in flexible tactile sensors for human-interactive systems: from sensors to advanced applications. Adv Mater 2021;33:e2005902.

10. Nie B, Geng J, Yao T, et al. Sensing arbitrary contact forces with a flexible porous dielectric elastomer. Mater Horiz 2021;8:962-71.

11. Duan Y, He S, Wu J, Su B, Wang Y. Recent progress in flexible pressure sensor arrays. Nanomaterials 2022;12:2495.

12. Duan S, Shi Q, Wu J. Multimodal sensors and ML-based data fusion for advanced robots. Adv Intell Syst 2022;4:2200213.

13. Yuan Z, Han S, Gao W, Pan C. Flexible and stretchable strategies for electronic skins: materials, structure, and integration. ACS Appl Electron Mater 2022;4:1-26.

14. Ruth SRA, Feig VR, Tran H, Bao Z. Microengineering pressure sensor active layers for improved performance. Adv Funct Mater 2020;30:2003491.

15. Zhang F, Jin T, Xue Z, Zhang Y. Recent progress in three-dimensional flexible physical sensors. Int J Smart Nano Mater 2022;13:17-41.

16. Yousef H, Boukallel M, Althoefer K. Tactile sensing for dexterous in-hand manipulation in robotics - a review. Sens Actuator A Phys 2011;167:171-87.

17. Li Q, Kroemer O, Su Z, Veiga FF, Kaboli M, Ritter HJ. A review of tactile information: perception and action through touch. IEEE Trans Robot 2020;36:1619-34.

18. Trung TQ, Lee NE. Flexible and stretchable physical sensor integrated platforms for wearable human-activity monitoring and personal healthcare. Adv Mater 2016;28:4338-72.

19. Tang L, Shang J, Jiang X. Multilayered electronic transfer tattoo that can enable the crease amplification effect. Sci Adv 2021;7:eabe3778.

20. Gu G, Zhang N, Xu H, et al. A soft neuroprosthetic hand providing simultaneous myoelectric control and tactile feedback. Nat Biomed Eng 2021.

21. Patel S, Ershad F, Zhao M, et al. Wearable electronics for skin wound monitoring and healing. Soft Sci 2022;2:9.

22. Cheng G, Dean-leon E, Bergner F, Rogelio Guadarrama Olvera J, Leboutet Q, Mittendorfer P. A comprehensive realization of robot skin: sensors, sensing, control, and applications. Proc IEEE 2019;107:2034-51.

23. Khatib O, Yeh X, Brantner G, et al. Ocean one: a robotic avatar for oceanic discovery. IEEE Robot Automat Mag 2016;23:20-9.

24. Xu DF, Loeb GE, Fishel JA. Tactile identification of objects using Bayesian exploration. In Proceedings of the ICRA 2013: IEEE International Conference on Robotics and Automation; 6-10 May 2013; Karlsruhe, Germany; pp. 3056-61.

25. Li J, Bao R, Tao J, Peng Y, Pan C. Recent progress in flexible pressure sensor arrays: from design to applications. J Mater Chem C 2018;6:11878-92.

26. Wang S, Wang C, Lin Q, et al. Flexible three-dimensional force sensor of high sensing stability with bonding and supporting composite structure for smart devices. Smart Mater Struct 2021;30:105004.

27. Wang Y, Wu X, Mei D, Zhu L, Chen J. Flexible tactile sensor array for distributed tactile sensing and slip detection in robotic hand grasping. Sens Actuator A Phys 2019;297:111512.

28. Guo Y, Wei X, Gao S, Yue W, Li Y, Shen G. Recent advances in carbon material-based multifunctional sensors and their applications in electronic skin systems. Adv Funct Mater 2021;31:2104288.

29. Li G, Liu S, Wang L, Zhu R. Skin-inspired quadruple tactile sensors integrated on a robot hand enable object recognition. Sci Robot 2020;5:eabc8134.

30. Yan Y, Hu Z, Yang Z, et al. Soft magnetic skin for super-resolution tactile sensing with force self-decoupling. Sci Robot 2021;6:eabc8801.

31. Soni M, Dahiya R. Soft eSkin: distributed touch sensing with harmonized energy and computing. Philos Trans A Math Phys Eng Sci 2020;378:20190156.

32. Lee Y, Park J, Cho S, et al. Flexible ferroelectric sensors with ultrahigh pressure sensitivity and linear response over exceptionally broad pressure range. ACS Nano 2018;12:4045-54.

33. Jiang X, Chen R, Zhu H. Recent progress in wearable tactile sensors combined with algorithms based on machine learning and signal processing. APL Mater 2021;9:030906.

34. Sundaram S, Kellnhofer P, Li Y, Zhu JY, Torralba A, Matusik W. Learning the signatures of the human grasp using a scalable tactile glove. Nature 2019;569:698-702.

35. Pressure profile systems®. The TactArray - pressure mapping sensor pads. Available from: https://pressureprofile.com/sensors/tactarray [Last accessed on 8 Mar 2023].

36. Iwata H, Sugano S. Design of human symbiotic robot TWENDY-ONE. In Proceedings of the ICRA 2009: IEEE International Conference on Robotics and Automation; 12-17 May 2009; Kobe, Japan; p. 3294.

37. Someya T, Sekitani T, Iba S, Kato Y, Kawaguchi H, Sakurai T. A large-area, flexible pressure sensor matrix with organic field-effect transistors for artificial skin applications. Proc Natl Acad Sci USA 2004;101:9966-70.

38. SynTouch Inc. SynTouch® BioTac® Tactile sensor. Available from: https://syntouchinc.com/sensor-documents/ [Last accessed on 8 Mar 2023].

39. Fishel JA, Loeb GE. Bayesian exploration for intelligent identification of textures. Front Neurorobot 2012;6:4.

40. Su Z, Kroemer O, Loeb GE, Sukhatme GS, Schaal S. Learning manipulation graphs from demonstrations using multimodal sensory signals. In Proceedings of the ICRA 2018: IEEE International Conference on Robotics and Automation; 21-25 May 2018; Brisbane, QLD, Australia; pp. 2758-65.

41. Yuan W, Dong S, Adelson EH. GelSight: High-resolution robot tactile sensors for estimating geometry and force. Sensors 2017;17:2762.

42. Li R, Platt R, Yuan WZ, et al. Localization and manipulation of small parts using GelSight tactile sensing. In Proceedings of the IROS 2014: IEEE/RSJ International Conference on Intelligent Robots and Systems; 14-18 September 2014; Chicago, IL, USA; pp. 3988-93.

43. Yuan W, Zhu C, Owens A, Srinivasan MA, Adelson EH. Shape-independent hardness estimation using deep learning and a GelSight tactile sensor. In Proceedings of the ICRA 2017: IEEE International Conference on Robotics and Automation; 29 May-3 June 2017; Singapore; pp. 951-8.

44. Mannsfeld SC, Tee BC, Stoltenberg RM, et al. Highly sensitive flexible pressure sensors with microstructured rubber dielectric layers. Nat Mater 2010;9:859-64.

45. OnRobot. OMD-20-SE-40N DATASHEET. Available from: https://www.g4.com.tw/userfiles/files/Datasheet/onrobot_3d_force_sensor_omd_20_se_40n.pdf [Last accessed on 8 Mar 2023].

46. Yao KP, Kaboli M, Cheng G. Tactile-based object center of mass exploration and discrimination. In Proceedings of the Humanoids 2017: IEEE-RAS 17th International Conference on Humanoid Robotics; 15-17 November 2017; Birmingham, UK; pp. 876-81.

48. Tenzer Y, Jentoft LP, Howe RD. The feel of MEMS barometers: inexpensive and easily customized tactile array sensors. IEEE Robot Autom Mag 2014;21:89-95.

49. Ades C, Gonzalez I, AlSaidi M, et al. Robotic finger force sensor fabrication and evaluation through a glove. Proc Fla Conf Recent Adv Robot 2018;2018:60-5.

50. Lin L, Xie Y, Wang S, et al. Triboelectric active sensor array for self-powered static and dynamic pressure detection and tactile imaging. ACS Nano 2013;7:8266-74.

51. Kim J, Lee M, Shim HJ, et al. Stretchable silicon nanoribbon electronics for skin prosthesis. Nat Commun 2014;5:5747.

52. Boutry CM, Negre M, Jorda M, et al. A hierarchically patterned, bioinspired e-skin able to detect the direction of applied pressure for robotics. Sci Robot 2018;3:eaau6914.

53. Sim K, Rao Z, Zou Z, et al. Metal oxide semiconductor nanomembrane-based soft unnoticeable multifunctional electronics for wearable human-machine interfaces. Sci Adv 2019;5:eaav9653.

54. Yu Y, Li J, Solomon SA, et al. All-printed soft human-machine interface for robotic physicochemical sensing. Sci Robot 2022;7:eabn0495.

55. Yancheng W, Yingtong L, Wen D, Deqing M. Recent progress on three-dimensional printing processes to fabricate flexible tactile sensors. Chin J Mech Eng 2020;56:239.

56. Wang C, Dong L, Peng D, Pan C. Tactile sensors for advanced intelligent systems. Adv Intell Syst 2019;1:1900090.

57. Zhu J, Zhou C, Zhang M. Recent progress in flexible tactile sensor systems: from design to application. Soft Sci 2021;1:3.

58. Wang Y, Zhu L, Mei D, Zhu W. A highly flexible tactile sensor with an interlocked truncated sawtooth structure based on stretchable graphene/silver/silicone rubber composites. J Mater Chem C 2019;7:8669-79.

59. Park J, Lee Y, Hong J, et al. Giant tunneling piezoresistance of composite elastomers with interlocked microdome arrays for ultrasensitive and multimodal electronic skins. ACS Nano 2014;8:4689-97.

60. Zhang J, Zhou LJ, Zhang HM, et al. Highly sensitive flexible three-axis tactile sensors based on the interface contact resistance of microstructured graphene. Nanoscale 2018;10:7387-95.

61. Qin J, Yin LJ, Hao YN, et al. Flexible and stretchable capacitive sensors with different microstructures. Adv Mater 2021;33:e2008267.

62. Viry L, Levi A, Totaro M, et al. Flexible three-axial force sensor for soft and highly sensitive artificial touch. Adv Mater 2014;26:2659-64.

63. Wan Y, Wang Y, Guo CF. Recent progresses on flexible tactile sensors. Mater Today Phys 2017;1:61-73.

64. Pan M, Yuan C, Liang X, Zou J, Zhang Y, Bowen C. Triboelectric and piezoelectric nanogenerators for future soft robots and machines. iScience 2020;23:101682.

65. Wu W, Wen X, Wang ZL. Taxel-addressable matrix of vertical-nanowire piezotronic transistors for active and adaptive tactile imaging. Science 2013;340:952-7.

66. Yan Y, Hu Z, Shen Y, Pan J. Surface texture recognition by deep learning-enhanced tactile sensing. Adv Intell Syst 2022;4:2100076.

67. Ge J, Wang X, Drack M, et al. A bimodal soft electronic skin for tactile and touchless interaction in real time. Nat Commun 2019;10:4405.

68. Kawasetsu T, Horii T, Ishihara H, Asada M. Flexible tri-axis tactile sensor using spiral inductor and magnetorheological elastomer. IEEE Sensors J 2018;18:5834-41.

69. Wang H, de Boer G, Kow J, et al. Design methodology for magnetic field-based soft tri-axis tactile sensors. Sensors 2016;16:1356.

70. Jiang C, Zhang Z, Pan J, Wang Y, Zhang L, Tong L. Finger-skin-inspired flexible optical sensor for force sensing and slip detection in robotic grasping. Adv Mater Technol 2021;6:2100285.

71. D’Abbraccio J, Aliperta A, Oddo CM, et al. Design and development of large-area FBG-based sensing skin for collaborative robotics. In Proceedings of the METROIND4.0&IOT 2019: IEEE International Workshop on Metrology for Industry 4.0 and Internet of Things; 4-6 June 2019; Naples, Italy; pp. 410-3.

72. Wang C, Qi B, Lin M, et al. Continuous monitoring of deep-tissue haemodynamics with stretchable ultrasonic phased arrays. Nat Biomed Eng 2021;5:749-58.

73. Deng W, Deng L, Hu Y, Zhang Y, Chen G. Thermoelectric and mechanical performances of ionic liquid-modulated PEDOT:PSS/SWCNT composites at high temperatures. Soft Sci 2022;1:14.

74. Park C, Kim MS, Kim HH, et al. Stretchable conductive nanocomposites and their applications in wearable devices. Appl Phys Rev 2022;9:021312.

75. Wang X, Dong L, Zhang H, Yu R, Pan C, Wang ZL. Recent progress in electronic skin. Adv Sci 2015;2:1500169.

76. Kaltenbrunner M, Sekitani T, Reeder J, et al. An ultra-lightweight design for imperceptible plastic electronics. Nature 2013;499:458-63.

77. Tien NT, Jeon S, Kim DI, et al. A flexible bimodal sensor array for simultaneous sensing of pressure and temperature. Adv Mater 2014;26:796-804.

78. Lipomi DJ, Vosgueritchian M, Tee BC, et al. Skin-like pressure and strain sensors based on transparent elastic films of carbon nanotubes. Nat Nanotechnol 2011;6:788-92.

79. Lee JH, Heo JS, Kim YJ, et al. A behavior-learned cross-reactive sensor matrix for intelligent skin perception. Adv Mater 2020;32:e2000969.

80. Ruth SRA, Beker L, Tran H, Feig VR, Matsuhisa N, Bao Z. Rational design of capacitive pressure sensors based on pyramidal microstructures for specialized monitoring of biosignals. Adv Funct Mater 2020;30:1903100.

81. Lee Y, Myoung J, Cho S, et al. Bioinspired gradient conductivity and stiffness for ultrasensitive electronic skins. ACS Nano 2021;15:1795-804.

82. Yang J, Luo S, Zhou X, et al. Flexible, tunable, and ultrasensitive capacitive pressure sensor with microconformal graphene electrodes. ACS Appl Mater Interfaces 2019;11:14997-5006.

83. Liu W, Liu N, Yue Y, et al. Piezoresistive pressure sensor based on synergistical innerconnect polyvinyl alcohol nanowires/wrinkled graphene film. Small 2018;14:e1704149.

84. Wang Y, Chen Z, Mei D, Zhu L, Wang S, Fu X. Highly sensitive and flexible tactile sensor with truncated pyramid-shaped porous graphene/silicone rubber composites for human motion detection. Compos Sci Technol 2022;217:109078.

85. Ha M, Lim S, Cho S, et al. Skin-inspired hierarchical polymer architectures with gradient stiffness for spacer-free, ultrathin, and highly sensitive triboelectric sensors. ACS Nano 2018;12:3964-74.

86. Wu Y, Liu Y, Zhou Y, et al. A skin-inspired tactile sensor for smart prosthetics. Sci Robot 2018;3:eaat0429.

87. Pan J, Jiang C, Zhang Z, Zhang L, Wang X, Tong L. Flexible liquid-filled fiber adapter enabled wearable optical sensors. Adv Mater Technol 2020;5:2000079.

88. Zhu L, Wang Y, Mei D, Ding W, Jiang C, Lu Y. Fully elastomeric fingerprint-shaped electronic skin based on tunable patterned graphene/silver nanocomposites. ACS Appl Mater Interfaces 2020;12:31725-37.

89. Xiong J, Chen J, Lee PS. Functional fibers and fabrics for soft robotics, wearables, and human-robot interface. Adv Mater 2021;33:e2002640.

90. Okatani T, Takahashi H, Noda K, Takahata T, Matsumoto K, Shimoyama I. A tactile sensor using piezoresistive beams for detection of the coefficient of static friction. Sensors 2016;16:718.

91. Cao D, Hu J, Li Y, Wang S, Liu H. Polymer-based optical waveguide triaxial tactile sensing for 3-dimensional curved shell. IEEE Robot Autom Lett 2022;7:3443-50.

92. Cheng X, Gong Y, Liu Y, Wu Z, Hu X. Flexible tactile sensors for dynamic triaxial force measurement based on piezoelectric elastomer. Smart Mater Struct 2020;29:075007.

93. Lee JI, Pyo S, Kim MO, Kim J. Multidirectional flexible force sensors based on confined, self-adjusting carbon nanotube arrays. Nanotechnology 2018;29:055501.

94. Liang G, Wang Y, Mei D, Xi K, Chen Z. Flexible capacitive tactile sensor array with truncated pyramids as dielectric layer for three-axis force measurement. J Microelectromech Syst 2015;24:1510-9.

95. Wang Z, Sun S, Li N, Yao T, Lv D. Triboelectric self-powered three-dimensional tactile sensor. IEEE Access 2020;8:172076-85.

96. Zhao XF, Hang CZ, Wen XH, et al. Ultrahigh-sensitive finlike double-sided e-skin for force direction detection. ACS Appl Mater Interfaces 2020;12:14136-44.

97. Chen S, Bai C, Zhang C, et al. Flexible piezoresistive three-dimensional force sensor based on interlocked structures. Sens Actuators A Phys 2021;330:112857.

98. Xu K, Fujita Y, Lu Y, et al. A wearable body condition sensor system with wireless feedback alarm functions. Adv Mater 2021;33:e2008701.

99. Wang Z, Bi P, Yang Y, et al. Star-nose-inspired multi-mode sensor for anisotropic motion monitoring. Nano Energy 2021;80:105559.

100. Zhang S, Suresh L, Yang J, Zhang X, Tan SC. Augmenting sensor performance with machine learning towards smart wearable sensing electronic systems. Adv Intell Syst 2022;4:2100194.

101. Takei K, Takahashi T, Ho JC, et al. Nanowire active-matrix circuitry for low-voltage macroscale artificial skin. Nat Mater 2010;9:821-6.

102. Zheng YQ, Liu Y, Zhong D, et al. Monolithic optical microlithography of high-density elastic circuits. Science 2021;373:88-94.

103. Sun H, Martius G. Guiding the design of superresolution tactile skins with taxel value isolines theory. Sci Robot 2022;7:eabm0608.

104. Ye J, Zhang F, Shen Z, et al. Tunable seesaw-like 3D capacitive sensor for force and acceleration sensing. NPJ Flex Electron 2021;5.

105. Wang S, Wang Y, Chen Z, Mei D. Kirigami design of flexible and conformal tactile sensor on sphere-shaped surface for contact force sensing. Adv Mater Technol 2023;8:2200993.

107. Luo Y, Li Y, Sharma P, et al. Learning human-environment interactions using conformal tactile textiles. Nat Electron 2021;4:193-201.

108. Yang T, Xie D, Li Z, Zhu H. Recent advances in wearable tactile sensors: materials, sensing mechanisms, and device performance. Mater Sci Eng R Rep 2017;115:1-37.

109. Park J, Kim M, Lee Y, Lee HS, Ko H. Fingertip skin-inspired microstructured ferroelectric skins discriminate static/dynamic pressure and temperature stimuli. Sci Adv 2015;1:e1500661.

110. Zhang F, Zang Y, Huang D, Di CA, Zhu D. Flexible and self-powered temperature-pressure dual-parameter sensors using microstructure-frame-supported organic thermoelectric materials. Nat Commun 2015;6:8356.

111. You I, Mackanic DG, Matsuhisa N, et al. Artificial multimodal receptors based on ion relaxation dynamics. Science 2020;370:961-5.

112. Pang C, Lee GY, Kim TI, et al. A flexible and highly sensitive strain-gauge sensor using reversible interlocking of nanofibres. Nat Mater 2012;11:795-801.

113. Park J, Lee Y, Hong J, et al. Tactile-direction-sensitive and stretchable electronic skins based on human-skin-inspired interlocked microstructures. ACS Nano 2014;8:12020-9.

114. Park S, Kim H, Vosgueritchian M, et al. Stretchable energy-harvesting tactile electronic skin capable of differentiating multiple mechanical stimuli modes. Adv Mater 2014;26:7324-32.

115. Zhu L, Wang Y, Mei D, et al. Large-area hand-covering elastomeric electronic skin sensor with distributed multifunctional sensing capability. Adv Intell Syst 2022;4:2100118.

116. Jo H, An S, Kwon HJ, Yarin AL, Yoon SS. Transparent body-attachable multifunctional pressure, thermal, and proximity sensor and heater. Sci Rep 2020;10:2701.

117. Yang R, Zhang W, Tiwari N, Yan H, Li T, Cheng H. Multimodal sensors with decoupled sensing mechanisms. Adv Sci 2022;9:e2202470.

118. Someya T, Kato Y, Sekitani T, et al. Conformable, flexible, large-area networks of pressure and thermal sensors with organic transistor active matrixes. Proc Natl Acad Sci USA 2005;102:12321-5.

119. Harada S, Kanao K, Yamamoto Y, Arie T, Akita S, Takei K. Fully printed flexible fingerprint-like three-axis tactile and slip force and temperature sensors for artificial skin. ACS Nano 2014;8:12851-7.

120. Yu P, Liu W, Gu C, Cheng X, Fu X. Flexible piezoelectric tactile sensor array for dynamic three-axis force measurement. Sensors 2016;16:819.

121. Ho DH, Sun Q, Kim SY, Han JT, Kim DH, Cho JH. Stretchable and multimodal all graphene electronic skin. Adv Mater 2016;28:2601-8.

122. Hua Q, Sun J, Liu H, et al. Skin-inspired highly stretchable and conformable matrix networks for multifunctional sensing. Nat Commun 2018;9:244.

123. Rashid M, Khan MA, Alhaisoni M, et al. A sustainable deep learning framework for object recognition using multi-layers deep features fusion and selection. Sustainability 2020;12:5037.

124. Wang Y, Chen J, Mei D. Flexible tactile sensor array for slippage and grooved surface recognition in sliding movement. Micromachines 2019;10:579.

125. Cao G, Zhou Y, Bollegala D, Luo S. Spatio-temporal attention model for tactile texture recognition. In Proceedings of the IROS 2020: IEEE/RSJ International Conference on Intelligent Robots and Systems; 25-29 October 2020; Las Vegas, NV, USA; pp. 9896-902.

126. Drimus A, Kootstra G, Bilberg A, Kragic D. Design of a flexible tactile sensor for classification of rigid and deformable objects. Robot Auton Syst 2014;62:3-15.

127. Cui Z, Wang W, Guo L, et al. Haptically quantifying Young’s modulus of soft materials using a self-locked stretchable strain sensor. Adv Mater 2022;34:e2104078.

128. Kerr E, McGinnity TM, Coleman S. Material classification based on thermal properties - a robot and human evaluation. In Proceedings of the ROBIO 2013: IEEE International Conference on Robotics and Biomimetics; 12-14 December 2013; Shenzhen, China; pp. 1048-53.

129. Hattori Y, Falgout L, Lee W, et al. Multifunctional skin-like electronics for quantitative, clinical monitoring of cutaneous wound healing. Adv Healthc Mater 2014;3:1597-607.

130. Gao W, Emaminejad S, Nyein HYY, et al. Fully integrated wearable sensor arrays for multiplexed in situ perspiration analysis. Nature 2016;529:509-14.

131. Seok D, Kim YB, Kim U, Lee SY, Choi HR. Compensation of environmental influences on sensorized-forceps for practical surgical tasks. IEEE Robot Autom Lett 2019;4:2031-7.